The shock of September 11, 2001, still reverberates, a visceral reminder of a day when nearly 3,000 lives were stolen and America’s sense of invulnerability shattered. Yet, as with so many major surprise attacks throughout history, the path to that disaster was paved with clear, unsettling signals that, for various reasons, went unheeded or were fatally mishandled. Understanding these pre-9/11 intelligence failures and warnings isn't just an academic exercise; it's a critical study in how systems, human nature, and political currents can conspire to create catastrophic blind spots.

When disaster strikes, the immediate question always surfaces: how could we not have known? Whether it's the October 7, 2023, Hamas attack on Israel or the 1941 bombing of Pearl Harbor, history teaches us that "surprise" is rarely a true absence of information. Far more often, it is a failure to correctly interpret, share, or act upon the information already in hand.

At a Glance: Key Takeaways from Pre-9/11 Intelligence Failures

- Abundant Warnings: U.S. intelligence agencies, particularly the CIA, had warned of a major Al Qaeda operation throughout the summer of 2001, including a top-secret August President's Daily Brief.

- Critical Information Silos: The CIA knew that two Al Qaeda operatives had entered the U.S. months before 9/11, but critically did not share that knowledge with the FBI, White House, or local police, a "tragic breakdown."

- Dismissed Red Flags: FBI warnings from 2000 about suspicious Middle Eastern individuals taking flight training were not taken seriously.

- Delayed Translations: Intercepted communications relevant to the plot were only translated after the attacks, on September 12, 2001.

- Historical Parallels: These failures echo those seen at Pearl Harbor (intelligence hoarding), the Yom Kippur War (hubris and dismissed warnings), and even the recent October 7 attack (over-reliance on technical intel, underestimation of the enemy).

- Systemic Issues: Recurring factors include institutional lack of coordination, human biases, insufficient imagination, and political interference overriding objective intelligence.

The Day the World Changed, But the Warnings Were There

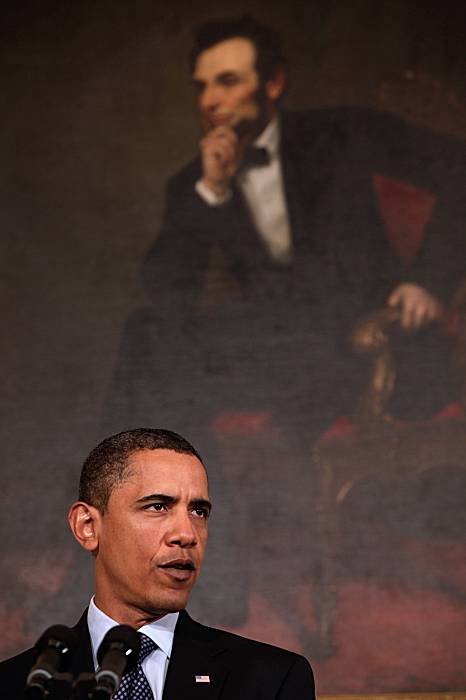

Imagine the summer of 2001. Intelligence analysts across various U.S. agencies were on edge. The Central Intelligence Agency (CIA) specifically issued warnings of a significant Al Qaeda operation brewing. This wasn't a vague hunch; the intelligence community had "lots of information" circulating, enough to prompt a top-secret President's Daily Brief (PDB) in August 2001, alerting President George W. Bush and his senior advisors to the escalating threat.

Dated August 6, 2001, and titled "Bin Ladin Determined To Strike in US," this PDB was a stark alert, indicating that Al Qaeda was poised for a major operation. Yet, despite this high-level alarm, critical pieces of the puzzle remained unconnected, lying dormant in separate agency files.

The Critical Information Gap: Operatives Inside

Perhaps the most glaring failure, a "tragic breakdown," as it's been described, involved two known Al Qaeda operatives. These individuals had entered the United States months before 9/11, and the CIA was aware of their presence. However, this crucial piece of information was not passed to the FBI, the White House, or local law enforcement agencies in time to act on it. Had it been shared promptly, allowing the FBI to track these individuals, the entire plot might have unraveled. This single oversight illustrates a profound problem in inter-agency coordination, a persistent challenge in national security.

Ignored Red Flags and Delayed Insights

The intelligence picture wasn't entirely blank at the FBI, either. As early as 2000, the agency had received warnings about suspicious individuals of Middle Eastern descent taking flight training. These warnings, however, were not "taken seriously." The seemingly disparate pieces of information—men with questionable backgrounds focusing on flight schools, specifically learning to fly large aircraft without bothering with landing instruction—should have raised more alarm bells than they did.

Further compounding the issue, crucial intercepted communications related to the impending attacks were not quickly deciphered. In a devastating timeline, these vital messages were only translated on September 12, 2001—the day after the attacks. This delay highlights significant bottlenecks in processing and analyzing intelligence, a challenge that goes beyond mere collection.

Then-National Security Advisor Condoleezza Rice later defended the administration's actions, stating that the threat reports in 2001 lacked specificity regarding time, place, or manner. She also noted that much of the intelligence focused on Al Qaeda activities outside the U.S., a point that itself suggests a failure of imagination regarding the enemy's intent and capability to strike within America's borders. It's a defense that underscores the challenge of predicting the precise form a threat will take, even when the threat itself is clearly identified. The cumulative effect of these failures contributed directly to the horrific events that unfolded, which is why the Bush administration and 9/11 will forever be linked.

A Familiar Echo: History's Unheeded Calls

While 9/11 stands as a uniquely painful chapter, the underlying intelligence failures are not isolated incidents. History is replete with examples where formidable intelligence capabilities were bypassed, misunderstood, or simply ignored, leading to catastrophic surprise attacks.

Pearl Harbor: A Precedent in Silos

Decades before 9/11, the surprise attack on Pearl Harbor in 1941, which drew the U.S. into World War II, shared a similar pattern of unshared intelligence. Indications clearly pointed to Pearl Harbor as a likely target. Yet, crucial intelligence was not shared with the White House. The reason? A "misguided view" that the interception capability itself was too precious to share widely, fearing Japanese discovery. This meant safeguarding the source of information took precedence over disseminating the content of the warning, a decision with devastating consequences.

Yom Kippur: The Peril of Hubris

In 1973, Israel, renowned for its intelligence prowess, was caught completely off guard by a coalition of Arab states led by Egypt and Syria in what became known as the Yom Kippur War. Despite warnings from various sources, including a secret alert from King Hussein of Jordan to Prime Minister Golda Meir, and even multiple warnings from an incredibly well-placed source, Ashraf Marwan (son-in-law of Egypt's former President Gamal Abdel Nasser), Israel dismissed the intelligence. The country's "supreme confidence" and "hubris" following its resounding victory in the 1967 Six-Day War led its leaders to believe another war was impossible. This overconfidence blinded them to clear threats, a textbook case of how internal biases can distort the perception of external realities and undermine intelligence analysis.

Iraq War: When Politics Trumped Intel

Intelligence can also be overridden by political or ideological aims. In 2003, the administration of U.S. President George W. Bush was convinced Iraq possessed weapons of mass destruction (WMDs). This conviction persisted even though UN inspectors had found no empirical evidence of such weapons and despite contrary advice from the U.S. intelligence community. The input from the "$US80 billion per year US intelligence community" was "pushed aside" by ideological preferences to overthrow Saddam Hussein. Here, the failure wasn't a lack of warning, but a deliberate disregard for intelligence that didn't align with predetermined political objectives.

October 7, 2023: The Latest Chapter in Surprise

The recent Hamas attack on Israel on October 7, 2023, tragically reiterated many of these historical patterns. Despite Israel's formidable intelligence capabilities, the scale and ferocity of the attack were a complete surprise. Hamas employed drones to disable cameras and guard posts, and bulldozers to breach Gaza's border fences. A key failure emerged from Israel's focus on "technical intelligence collection"—sensors, surveillance, signal intercepts—over "good old-fashioned human spying," resulting in inadequate infiltration of Hamas.

Clear warning signs were missed, including Hamas building a full-scale "mock replica" of an Israeli town and practicing attacks for weeks. Prime Minister Benjamin Netanyahu initially claimed security officials believed "Hamas was deterred and interested in an arrangement" (a post he later deleted), suggesting an entrenched assumption that blinded decision-makers to reality. This echoes the "supreme confidence" seen during the Yom Kippur War and underscores the critical need for a balanced approach to human and technical intelligence sources.

Dissecting the Dysfunction: Why Intelligence Fails

Why do these failures recur? The patterns are strikingly similar across different eras and geographies, pointing to fundamental challenges within intelligence gathering and analysis.

The Silo Effect: Information That Never Connects

One of the most persistent problems, highlighted starkly by the pre-9/11 failure to share what was known about the two operatives, is the "silo effect." Agencies, often due to bureaucratic inertia, competition, or an overly protective stance on their unique capabilities, fail to share critical data. This was evident at Pearl Harbor, where the secrecy of interception capabilities overshadowed the urgent need to disseminate warnings. When vital pieces of intelligence remain isolated, no single entity can form a complete picture, leading to collective blind spots.

The Human Element: Bias, Blind Spots, and Imagination Gaps

Intelligence, at its core, is a human endeavor. This means it's susceptible to human limitations:

- Cognitive Biases: Analysts and decision-makers often fall prey to confirmation bias, seeking information that confirms existing beliefs and dismissing contradictory evidence.

- Lack of a "Crystal Ball": As the 9/11 defense by Condoleezza Rice highlighted, intelligence is rarely precise about "time, place, or manner." The future is inherently uncertain, and expecting perfect predictions is unrealistic.

- Insufficient Imagination: Countries and intelligence agencies frequently prepare based on past enemy behaviors. This makes it incredibly difficult to anticipate new tactics, strategies, or levels of audacity, as seen with Hamas's innovative use of drones and bulldozers, or Al Qaeda's unprecedented use of commercial airliners as weapons.

- "Fixing" the Intelligence: Sometimes, intelligence is not just misinterpreted, but actively "fixed" or spun to support a predetermined agenda. This was explicitly identified in the lead-up to the 2003 Iraq War, where objective intelligence was "pushed aside" by political preferences. When policymakers start with a conclusion and search for intelligence to support it, the entire system breaks down. This erodes trust and undermines the very purpose of an independent intelligence community.

Political Pressure: The Erosion of Objectivity

When intelligence is "pushed aside" by ideological or political aims, as was the case with the 2003 Iraq War, the system fails spectacularly. Leaders may pressure intelligence agencies to deliver findings that support a desired policy outcome, rather than objective assessments. This politicization compromises the integrity of intelligence and leads to decisions based on conviction rather than fact.

Overconfidence and Underestimation

The Yom Kippur War is a powerful testament to the dangers of "supreme confidence" and "hubris." When an intelligence apparatus becomes too reliant on its past successes or assumes an enemy is "deterred" (as suggested by Netanyahu's initial remarks after October 7), it creates a dangerous complacency. Underestimating an adversary's capabilities, motivations, or willingness to take risks is a common precursor to surprise attacks. The same error extends to misjudging the kind of attack an enemy will mount: planners focus on conventional threats while ignoring asymmetric ones.

The Lure of the Technical Over the Human

The October 7 attack underscored another critical imbalance: an over-reliance on technical intelligence collection at the expense of human intelligence (HUMINT). While signals intelligence, satellite imagery, and cyber monitoring are invaluable, they often miss the human element: the intent, the plans, the morale, and the detailed operational specifics that only well-placed human sources can provide. Inadequate infiltration of enemy groups means critical ground-level warnings are missed, leaving a gaping hole in the intelligence picture. That hole is widest for unconventional threats, which human sources are best placed to anticipate.

The Road Ahead: Building Resilient Intelligence

Learning from pre-9/11 intelligence failures and subsequent events isn't about assigning blame but about building more robust, adaptable, and self-correcting intelligence systems.

Fostering a Culture of Sharing

The "tragic breakdown" of information sharing pre-9/11 demands a permanent shift towards proactive, mandatory, and seamless inter-agency communication. This means:

- Breaking Down Silos: Implementing clear protocols and technological platforms for secure, real-time information exchange between agencies (CIA, FBI, NSA, DHS, local law enforcement).

- "Need-to-Share" vs. "Need-to-Know": Shifting the default from withholding information unless explicitly requested, to sharing widely unless there's a compelling reason not to.

- Joint Task Forces: Encouraging integrated teams where analysts and agents from different agencies work side-by-side on specific threats, fostering trust and direct communication.

Embracing Diverse Perspectives

To combat cognitive biases and imagination gaps, intelligence agencies must actively cultivate diverse perspectives and challenge assumptions.

- "Red Teaming": Regularly employing "red teams" whose sole purpose is to challenge conventional wisdom, play the adversary, and explore "worst-case" or unconventional scenarios.

- Devil's Advocate Roles: Establishing institutionalized roles for analysts to argue against prevailing assessments.

- Open Source Intelligence (OSINT): Incorporating a broader range of information, including publicly available data, to provide context and challenge internal narratives. This helps broaden the base for comprehensive threat assessments.

Rebalancing Intel Collection

The pendulum swings between technical and human intelligence. A truly resilient system requires a balanced approach:

- Investing in HUMINT: Renewed focus on recruiting, training, and protecting human assets, especially in hard-to-penetrate organizations. This means a long-term investment that acknowledges the risks but also the unparalleled insights HUMINT can provide.

- Integrating All-Source Analysis: Ensuring that signals intelligence, imagery intelligence, open-source intelligence, and human intelligence are not just collected but thoroughly integrated and cross-referenced by analysts.

Insulating Analysis from Politics

Maintaining the independence and objectivity of intelligence analysis is paramount.

- Clear Lines of Authority: Establishing clear boundaries between intelligence analysis and policy advocacy.

- Protecting Dissent: Creating an environment where dissenting analytical opinions are encouraged, documented, and given due consideration, rather than suppressed.

- Education for Policymakers: Providing policymakers with a clear understanding of the limitations and uncertainties inherent in intelligence, helping them interpret assessments realistically.

Guarding Against Tomorrow's Blind Spots

The tragedy of the pre-9/11 intelligence failures, and its echoes in events like Pearl Harbor, the Yom Kippur War, and the October 7, 2023, attack, is a perpetual reminder that intelligence is never perfect and the enemy always adapts. The goal isn't to achieve infallibility, but to cultivate a system that is constantly learning, questioning, and striving for greater integration and imagination.

Ultimately, the fight against future surprises hinges on a commitment to transparency within the intelligence community, a willingness to scrutinize comfortable assumptions, and the courage to act on warnings, however vague or inconvenient they may seem. It demands a leadership that values objective truth over political expediency, and an operational culture that prioritizes the shared national interest above individual agency turf. Only then can we hope to minimize the "tragic breakdowns" that pave the road to disaster and better protect ourselves from the threats lurking in tomorrow's shadows.